Most of us take computers for granted these days. They are everywhere: desktops, laptops, mobile phones, cars, and the list goes on. These seemingly small machines have become essential to our day-to-day activities. Without them, the world we know and love would probably be unable to operate the way it does.

Like every other invention, computers have a long history behind their development. The idea of computation dates back centuries, but its practical implementation only emerged in recent decades. Because computers are used almost universally, countless people have worked on advancing them, each contributing findings that improved their speed, efficiency, and capability.

Though their contributions differed, each of them shaped the subsequent history of computers as we know it today. From physical implementations and theoretical ideas to programming languages, you name it, these people worked on very diverse aspects of computing. Had these puzzle pieces not come together, today’s technological breakthroughs like blockchain, video games, and the internet, including the very article you are reading, wouldn’t be possible.

So who were these influential people whose works impacted the development of computers? Before we dive into more niche and applied fields like PC gaming, let’s take a step back and start from the fundamentals. In no particular order, these are ten of the most influential people in the history of computer development.

Charles Babbage

One of the earliest designs for a mechanical, programmable computer came from Charles Babbage, a Lucasian Professor of Mathematics at Cambridge University. Babbage first proposed the Difference Engine, a computing machine designed to generate mathematical tables. The Difference Engine aimed to automate the tedious, error-prone work of producing tables such as logarithm tables, astronomical tables, and actuarial tables.

Soon enough, Babbage proposed the Analytical Engine, essentially a more general version of the Difference Engine. The Analytical Engine was far more versatile than its predecessor, and its design follows much the same logic as modern computers. It was designed with a memory (the “store”), a central processing unit (the “mill”), and conditional branching, and it was to be controlled by a program of instructions.

Ada Lovelace

Building on Babbage’s work, Ada Lovelace realized that the Analytical Engine could be applied to tasks beyond mere calculation. In fact, she suggested that the machine might be capable of composing “elaborate and scientific pieces of music of any degree of complexity or extent.” Moreover, she wrote an algorithm for the Analytical Engine to compute Bernoulli numbers, often described as one of the first computer programs. For this reason, she is widely considered the first computer programmer.
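Lovelace’s Note G described, step by step, how the Engine could compute Bernoulli numbers. As a modern illustration only (this is not a transcription of her program), the Python sketch below computes them with the standard recurrence in which, for each m ≥ 1, the sum of C(m+1, k)·B_k over k = 0..m equals zero, using the convention B₁ = −1/2. The function name `bernoulli_numbers` is our own.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions (convention B_1 = -1/2)."""
    b = [Fraction(0)] * (n + 1)
    b[0] = Fraction(1)
    for m in range(1, n + 1):
        # From sum_{k=0}^{m} C(m+1, k) * B_k = 0, solve for the last term B_m.
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b[m] = -total / (m + 1)
    return b


b = bernoulli_numbers(8)
print(b[2], b[4])  # prints: 1/6 -1/30
```

Exact `Fraction` arithmetic is used so the results come out as the familiar rational values rather than rounded decimals.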

Ada Lovelace’s legacy is part inspiring and part amusing. On one hand, her idea of applying computers to more general fields via programs inspired generations of programmers. On the other, despite her groundbreaking work, she had no formal university training, was a poet’s daughter, and saw herself as a fairy.

Joseph Marie Jacquard

Today, the question of who the first programmer really was remains hotly debated. Some argue that Ada Lovelace’s work wasn’t truly original and that Babbage had come up with the same ideas, which simply never made it off the drawing board. But perhaps the person who deserves credit for the idea of a program of instructions is Joseph Marie Jacquard.

Jacquard developed a loom that used a chain of punched cards to control the pattern being woven. It was this punched-card idea that Babbage later adopted for programming the Analytical Engine. Further down the timeline, punched cards became one of the earliest ways of programming and feeding data into IBM computers.

Alan Mathison Turing

A more prominent figure in the modern history of computing is Alan Turing, famous for his work breaking the German Enigma code during World War II. Beyond this achievement, which arguably shaped world history as we know it, Turing laid out the theoretical principles of the modern computer. His abstract machine, now known as the Turing machine, operates by manipulating symbols on a strip of tape according to a table of rules.
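To make the idea concrete, here is a minimal Python sketch of a one-tape Turing machine simulator. The rule-table format and the example machine, `INCREMENT_RULES`, which adds one to a binary number, are hypothetical choices for illustration, not Turing’s original notation.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=10_000):
    """Simulate a one-tape Turing machine until it enters the "halt" state.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 for left and +1 for right.
    """
    cells = dict(enumerate(tape))  # sparse tape; unvisited cells read as blank
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    if not cells:
        return ""
    # Read back the visited stretch of tape, dropping blanks at either end.
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical example machine: add 1 to a binary number.
# "start" scans right to the end of the number; "carry" adds 1 moving left.
INCREMENT_RULES = {
    ("start", "0"): ("0", +1, "start"),
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying
    ("carry", "0"): ("1", -1, "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", -1, "halt"),   # ran off the left edge: new leading 1
}

print(run_turing_machine(INCREMENT_RULES, "1011"))  # prints: 1100
```

Each step reads one symbol, looks up the (state, symbol) pair in the table, writes a symbol, moves the head one cell, and switches state; that loop is all a Turing machine ever does.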

Beyond these fundamental computing concepts and his breakthroughs in codebreaking, Turing proposed ideas related to modern artificial intelligence and artificial life. His legacy extends to the famous halting problem, the notion of Turing completeness in programming languages, and many other intriguing ideas.

John von Neumann

Like Turing, John von Neumann was a leading figure in the earliest days of modern computing. Early in life, von Neumann was already regarded as a child prodigy. Many even dubbed him the last representative of the great mathematicians, given his ability to integrate pure and applied sciences.

A classic example of von Neumann’s contributions to computing is merge sort, a sorting algorithm that runs in O(n log n) time, faster than the quadratic time of simpler methods such as insertion sort. This is only one instance of his work; he also contributed to many other areas, including cellular automata, game theory, and artificial intelligence.
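Merge sort’s sub-quadratic running time follows from its divide-and-conquer structure: it splits the list in half, sorts each half recursively, and merges the two sorted halves in linear time. With about log₂ n levels of splitting and O(n) merging work per level, the total is O(n log n). The sketch below is a plain top-down version in Python, not von Neumann’s original formulation.

```python
def merge_sort(items):
    """Return a new sorted list using top-down merge sort, O(n log n)."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])

    # Merge the two sorted halves by repeatedly taking the smaller head.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:  # <= keeps equal items in original order (stable)
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # One half is exhausted; the rest of the other is already sorted.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sort([38, 27, 43, 3, 9, 82, 10]))  # prints: [3, 9, 10, 27, 38, 43, 82]
```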

Gottfried Wilhelm Leibniz

Let’s take a detour back in time to examine a more fundamental idea: the binary number system, which underpins pretty much the rest of computing and programming history. Although binary was studied independently by several people in different parts of the world, Leibniz helped refine the binary number system that shaped digital computation and later influenced the work of von Neumann.
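As a quick illustration of positional binary, the number 13 is 8 + 4 + 1, or 1101 in base 2. The hypothetical helpers below convert between integers and binary strings by repeated division and multiplication by 2; Python’s built-in `bin()` and `int(s, 2)` do the same job.

```python
def to_binary(n):
    """Convert a non-negative integer to its binary digit string."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next lowest bit
        n //= 2
    return "".join(reversed(digits))


def from_binary(bits):
    """Convert a binary digit string back to an integer."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)  # shift left one place, then add the new bit
    return value


print(to_binary(13), from_binary("1101"))  # prints: 1101 13
```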

If you’re unfamiliar with Leibniz, he is also famous for developing calculus independently of Isaac Newton. Moreover, his philosophical ideas extended beyond mathematics into computation, including his dream of reducing reasoning to calculation. Unfortunately, Turing’s work on the halting problem ultimately showed that such a fully general process is impossible.

Blaise Pascal

Stepping further back in history, we find the French mathematician Blaise Pascal, who invented a mechanical calculator known as Pascal’s calculator, or the Pascaline. The machine could perform addition and subtraction, two basic operations that can be extended to multiplication and division through repetition.
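The idea that addition and subtraction can stand in for multiplication and division can be sketched in a few lines of Python: multiplication as repeated addition and division as repeated subtraction. This is a conceptual illustration with hypothetical function names, not a model of the Pascaline’s gear mechanism.

```python
def multiply(a, b):
    """Multiply non-negative integers using only repeated addition."""
    if a < 0 or b < 0:
        raise ValueError("operands must be non-negative")
    total = 0
    for _ in range(b):
        total += a  # add a to the running total, b times
    return total


def divide(a, b):
    """Divide non-negative a by positive b using only repeated subtraction.

    Returns (quotient, remainder).
    """
    if a < 0 or b <= 0:
        raise ValueError("need a >= 0 and b > 0")
    quotient = 0
    while a >= b:
        a -= b  # take away one more b and count it
        quotient += 1
    return quotient, a


print(multiply(12, 4), divide(50, 8))  # prints: 48 (6, 2)
```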

This machine also influenced Leibniz’s ideas, as well as later devices as simple as the office calculator. It was a pioneer of what would become the modern field of computer engineering, paving the way for the design of mechanical computing machines and, ultimately, computers.

David John Wheeler

Now, let’s leap forward in time to modern-day computers. Under the hood, the programs we run are executed by, well, a processing unit, in a language of instructions it understands. Strictly speaking, processors execute machine code (raw binary instructions), and assembly language is a human-readable notation for those instructions. Wheeler, who worked on Cambridge’s EDSAC computer, is credited as one of the first creators of an assembly language.

Compilers for higher-level languages like C and C++ often translate programs into assembly language, which an assembler then converts into machine code. Unlike high-level languages, assembly language is hard for most people to read, because it closely mirrors a particular architecture’s machine code instructions.

John Warner Backus

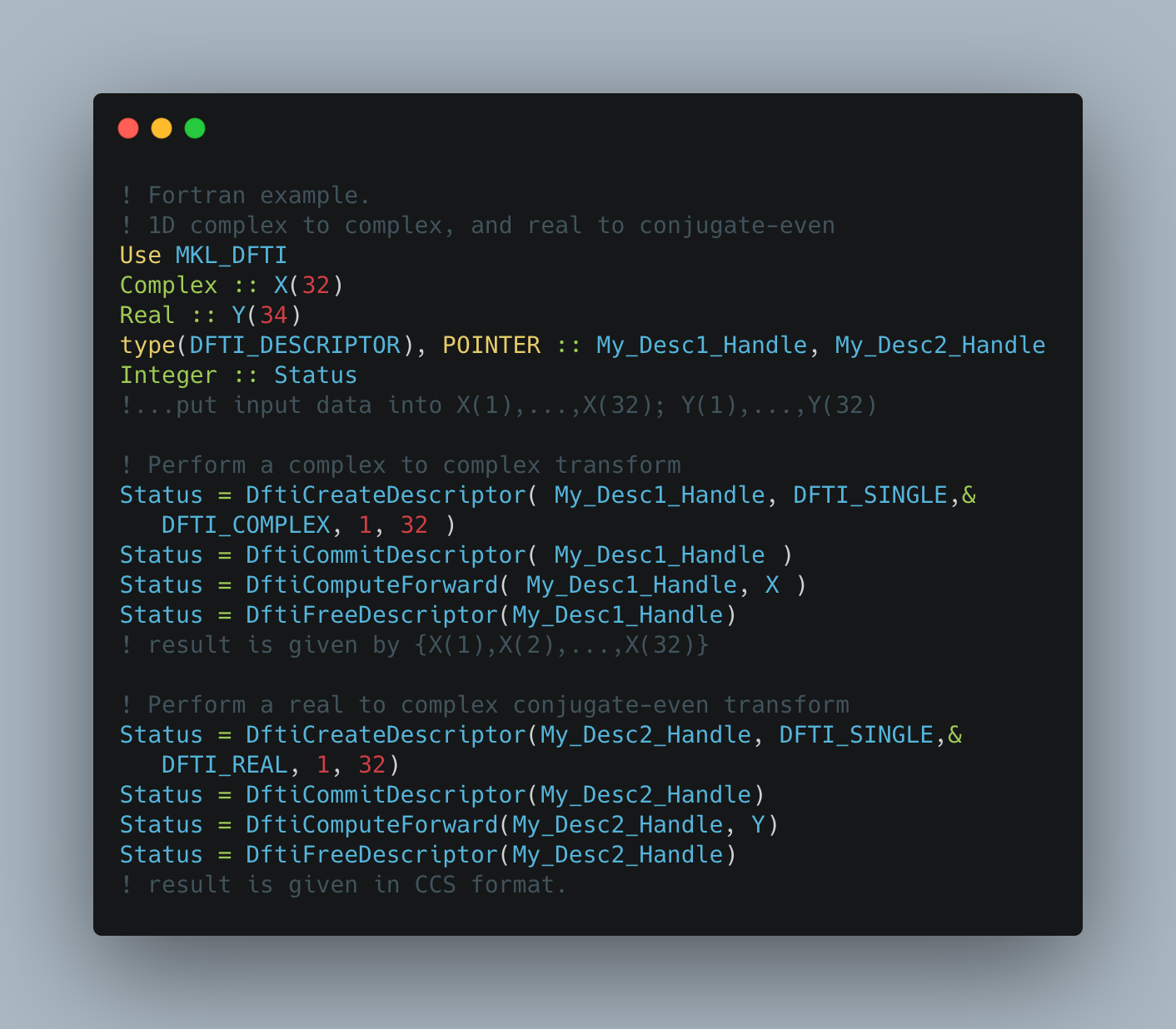

As discussed earlier, assembly language isn’t the most convenient programming language. Its instructions are rigid and low-level, which made it hard to write algorithms for tasks such as simulating the physical world. John Warner Backus, who worked at IBM, proposed a more practical programming language known as Fortran.

Fortran offered a novel way of programming that sits a level above assembly language. Translating Fortran code into machine instructions required another puzzle piece: a compiler. The Fortran compiler was one of the first optimizing compilers, and Fortran’s success paved the way for later high-level languages such as Lisp, C, and Java.

Dennis MacAlistair Ritchie

Finally, we conclude this list with a more modern programming language that remains influential today. Like Fortran, C is a higher-level programming language, and it is widely used to build operating systems such as Unix, Linux, Windows, and macOS. Dennis Ritchie created C at Bell Laboratories, where he and Ken Thompson used it to rewrite the Unix operating system.

Applications of the C programming language are virtually countless, not to mention other programming languages derived from C, like C++ and Objective-C. More importantly, modern electronics like your washing machine and smart fridge most likely run software written in C, given the language’s speed and efficiency. Despite its age, C ranked first in the May 2021 TIOBE index of programming language popularity.

Closing Remarks

These people weren’t the only influential figures who contributed to the development of computers. Countless others deserve a place on this list, but we only have so much time on our hands. I highly recommend reading further if you’re interested in the history of computing, as so much has been invested in this unique field.

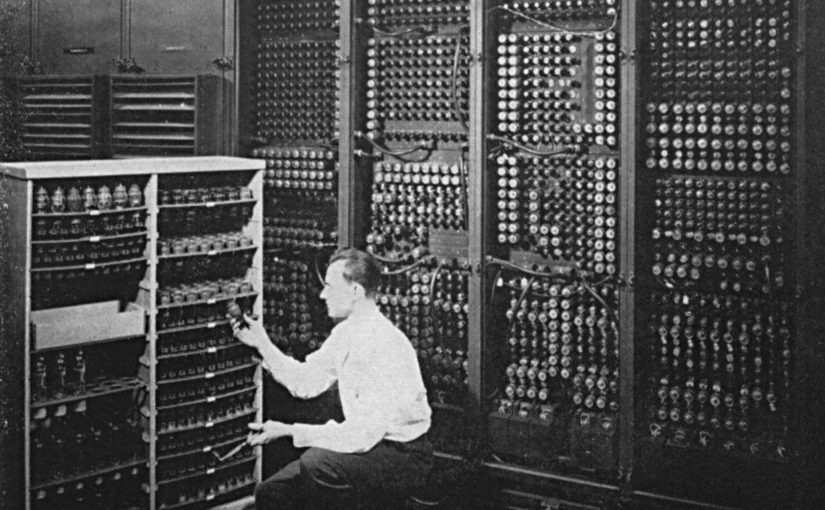

Featured Image by Wikimedia Commons.